Scientific hiring

How hiring managers review portfolios and resumes and choose whom to invite for a job interview. Research results.

As a former design manager who led a team of 35+ product designers and hired them personally, I was looking for a quantifiable, scientific way to determine what makes a good application. Over the years, I have also helped several companies with their hiring efforts. I heard all kinds of opinions and discussions on the matter, but there was never any data to back up the claims people made. So I decided to do some research and designed an experiment that would produce a quantifiable result. In the end, I trained a simple ML model that predicted, with 75% accuracy, whether an application qualified as a "good application".

Abstract

I gathered the applications of 243 real designers actively looking for a job and enlisted the help of 16 hiring managers, who agreed to rate whether each application was suitable for an interview. At least seven hiring managers evaluated each application.

I timed 1,700 reviews and found that a hiring manager takes less than one minute to make a decision about an application and portfolio. This shows how important it is to make a strong first impression and to communicate your skills and experience quickly and effectively.

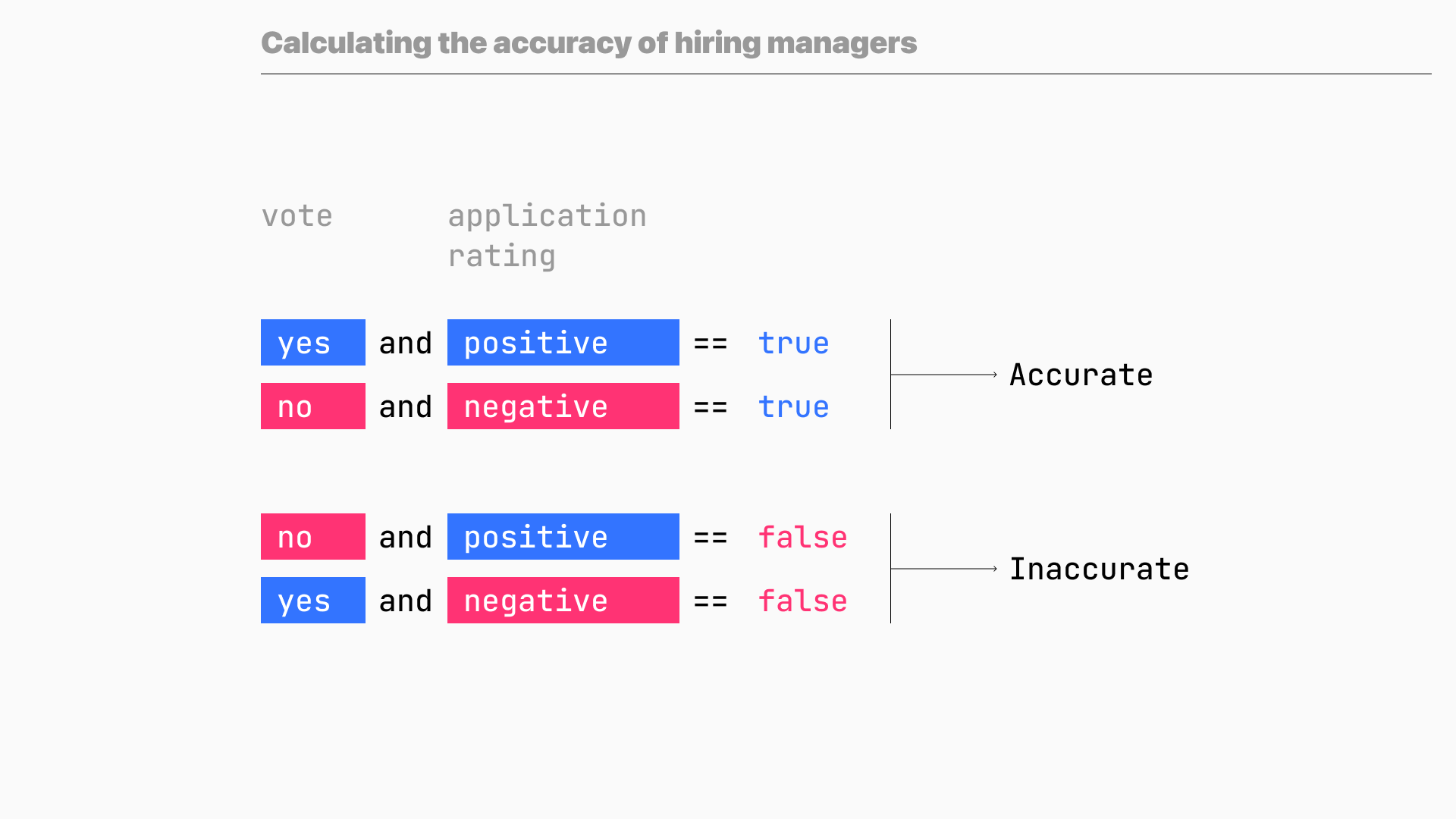

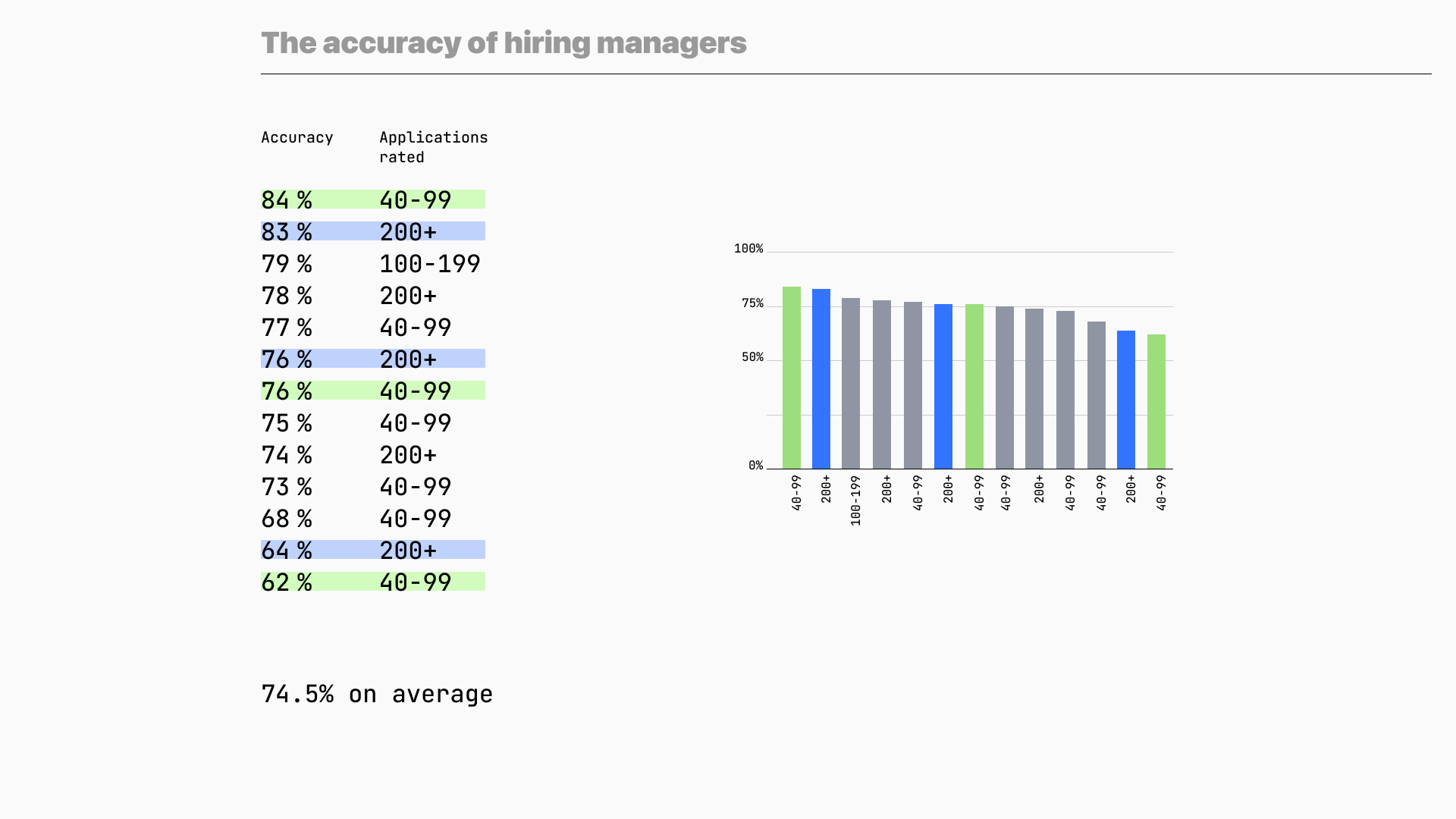

I also looked at the accuracy of the hiring managers themselves. The most accurate manager had an accuracy rate of 84%, while the least accurate had a rate of 62%. On average, the hiring managers had an accuracy rate of 75%, which leaves room for mistakes. By using a standardized evaluation process and involving multiple hiring managers (or a team), companies can make more informed decisions, identify the fittest candidates for the job, and reduce the number of possible mistakes.

I have talked to hundreds of candidates, and now and then they wonder why a hiring manager or recruiter would not reach out to them or spend more time on their application. Some candidates think that the hiring party should spend more time exploring a candidate's background. However, when candidates tried filtering through piles of applications themselves, they learned that the quick decisions actually make sense, and they came away with a better understanding of how to apply (empathy).

Overall, the research provided valuable insights into what makes a successful job application and the potential of using data-driven methods to improve the hiring process.

Experiment design

- The study engaged 16 hiring managers from product companies like Intercom, Arrival, Revolut, Arconis and Miro. All of them were actively hiring.

- There were 243 applications in the study. A cover letter was mandatory, a link to a portfolio was optional.

- At least seven managers rated each application.

Rating applications

Hiring managers could see five applications at a time. After rating a batch of five, they could proceed to the next five. This was a deliberate design choice to make sure all applications got rated. In real life, managers might have looked at a wall of applications and filtered through them (e.g., only those with portfolios). My goal was to learn what makes a good application, so the UI was a limiting factor by design.

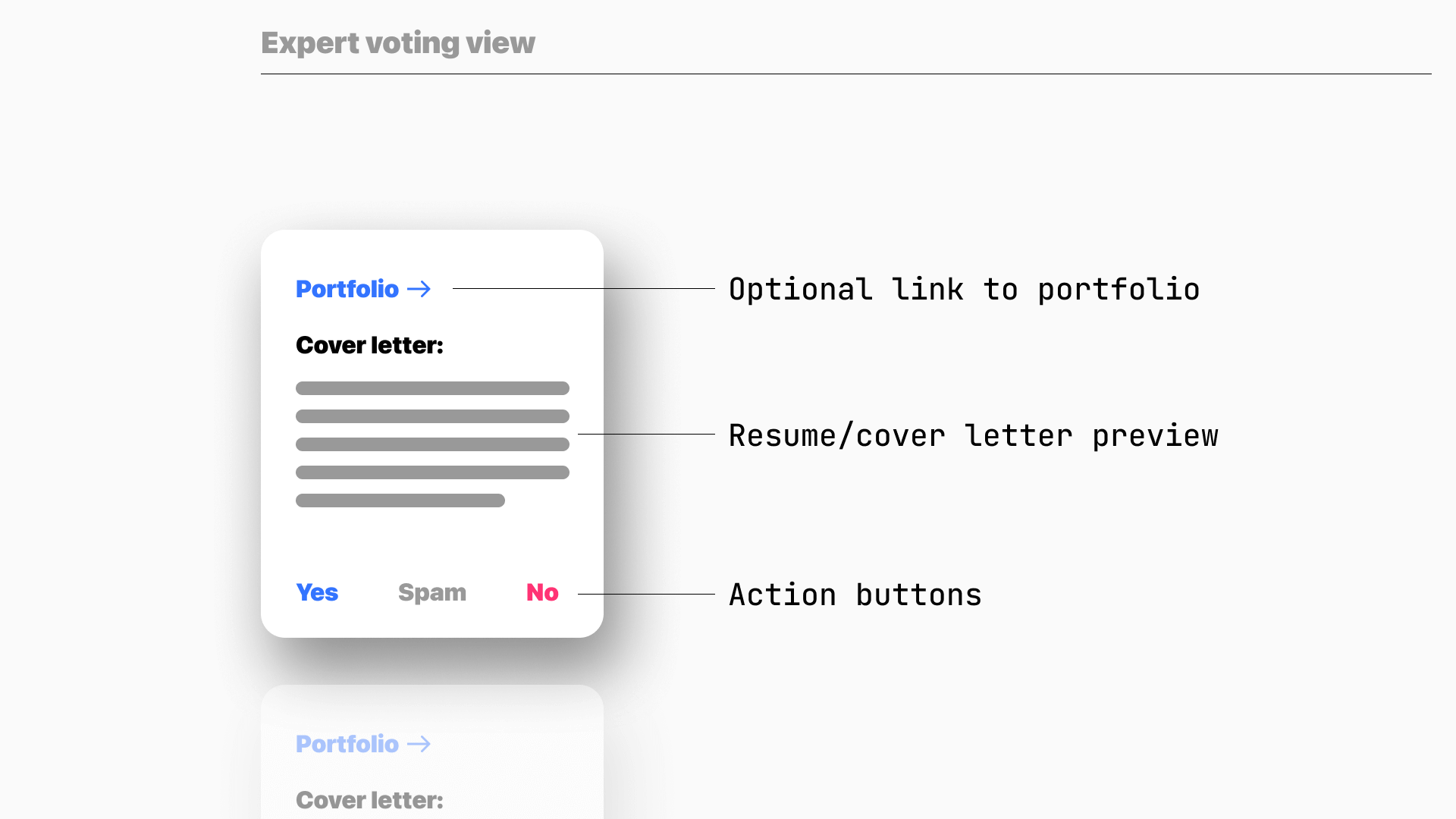

Inbox emulator

Hiring managers saw a feed with applications. They could tap on the portfolio link (if it was available), see the preview of an application, and vote. The managers were assigned to applications randomly, and their evaluations came in one of three forms: YES, NO, or SPAM. A YES meant the designer was selected for an interview, while a NO meant they were not.

Measuring application success

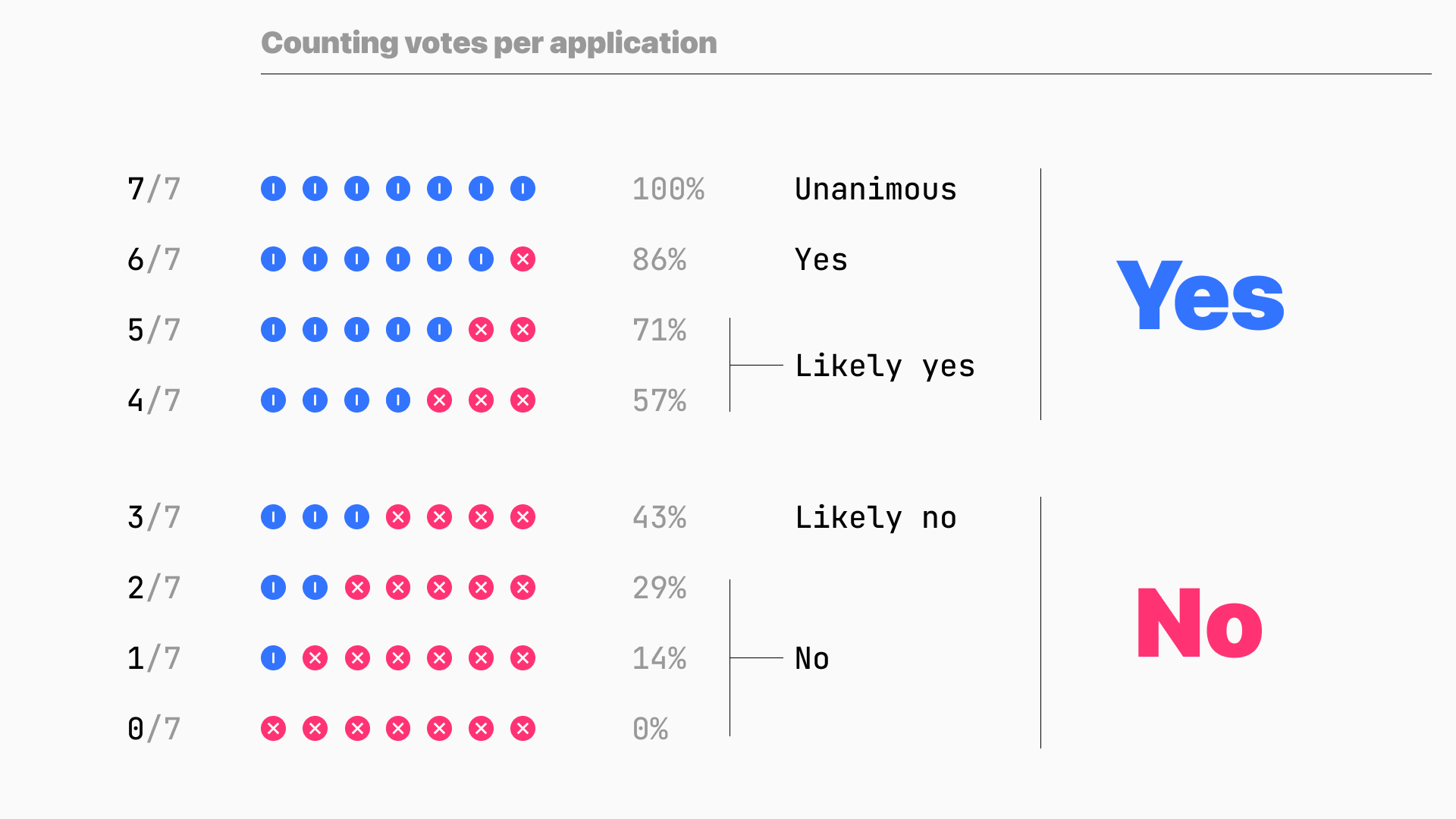

We scored each YES as a +1 and each NO as a -1, and the resulting score indicated the strength of the decision.
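The scoring rule above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the study: the sample votes and application IDs are hypothetical, and since the article does not say how SPAM votes were counted, the sketch assumes they contribute 0.

```python
# Vote weights: the article defines YES = +1 and NO = -1.
# ASSUMPTION: SPAM adds 0; the article does not say how SPAM was scored.
VOTE_WEIGHTS = {"YES": 1, "NO": -1, "SPAM": 0}


def application_score(votes):
    """Sum the weights of all votes cast on one application."""
    return sum(VOTE_WEIGHTS[vote] for vote in votes)


# Hypothetical votes from seven managers on three applications.
examples = {
    "app_a": ["YES"] * 7,
    "app_b": ["YES", "YES", "YES", "YES", "NO", "NO", "NO"],
    "app_c": ["NO", "NO", "NO", "NO", "NO", "SPAM", "YES"],
}

scores = {app_id: application_score(votes) for app_id, votes in examples.items()}
print(scores)
```

A score far from zero in either direction means the managers largely agreed, while a score near zero signals a split decision.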

Counting the weight of an application

Having multiple ratings for each application helped smooth out any misplaced decisions and allowed us to evaluate both applications and the accuracy of the hiring managers. We scored the managers based on how aligned their votes were with the majority, and if their rating was in line with the consensus, we considered it to be accurate.

Applications distribution and results

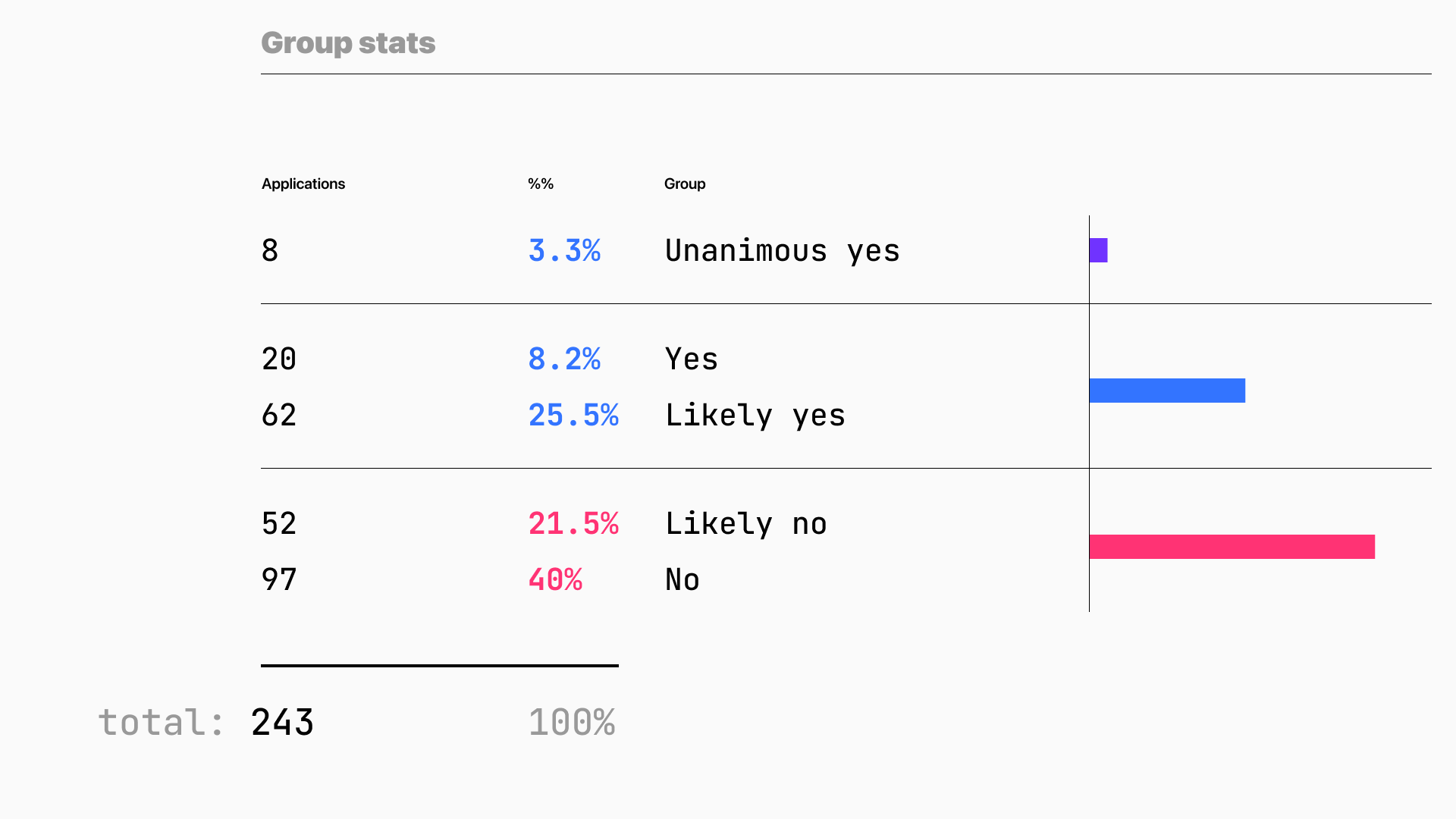

Here is how the 243 applications (100%) fell into six groups after I processed all the votes:

Common features of successful applications

I measured the average length of an application and how long it took to rate an application within each group. There was no significant correlation between the length of an application and the median rating time across groups. For example, one application from the "Unanimous yes" group was 538 words long (compared to the average of 276).

What makes a good application

- A link to a portfolio

- Facts about launched projects and results

- Work done in the last two years (recent experience)

- Emphasized or obvious experience of working in a product team

Consider mobile, seriously

I got feedback from four managers that they were reading applications on mobile devices and had a hard time opening portfolios that were not optimized for mobile (desktop-sized only). This is something designers should seriously consider when building their portfolios.

Hiring managers' accuracy

Once I had all groups calculated, I reduced each application's ratings to a binary outcome: applications with ratings of 4 out of 7 and above were marked as a YES and the rest as a NO.

I compared every rating each manager gave against the application's consensus rating. I counted each matching instance and computed the ratios. If a manager's rating was in line with the majority vote, that rating was considered accurate.
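As a rough sketch of this calculation, the Python below derives a consensus label per application and then the share of each manager's votes that match it. The function names, the `(manager, application, vote)` tuple layout and the sample data are all hypothetical. It also assumes a fixed threshold of 4 YES votes and treats SPAM as a non-YES vote, neither of which the article spells out for applications with more than seven ratings.

```python
from collections import defaultdict


def consensus_labels(ratings, yes_threshold=4):
    """Label an application YES if it received at least `yes_threshold` YES votes.

    ASSUMPTION: a fixed threshold of 4, matching the "4 out of 7" rule.
    """
    yes_counts = defaultdict(int)
    app_ids = set()
    for _manager, app_id, vote in ratings:
        app_ids.add(app_id)
        if vote == "YES":
            yes_counts[app_id] += 1
    return {
        app_id: "YES" if yes_counts[app_id] >= yes_threshold else "NO"
        for app_id in app_ids
    }


def manager_accuracy(ratings, yes_threshold=4):
    """Share of each manager's votes that agree with the consensus label."""
    labels = consensus_labels(ratings, yes_threshold)
    matches = defaultdict(int)
    totals = defaultdict(int)
    for manager, app_id, vote in ratings:
        # ASSUMPTION: SPAM is treated as a NO when comparing to the consensus.
        binary_vote = "YES" if vote == "YES" else "NO"
        totals[manager] += 1
        if binary_vote == labels[app_id]:
            matches[manager] += 1
    return {manager: matches[manager] / totals[manager] for manager in totals}


# Hypothetical data: seven managers, two applications.
ratings = (
    [(f"m{i}", "app_x", "YES") for i in range(1, 6)]    # m1..m5 vote YES
    + [(f"m{i}", "app_x", "NO") for i in range(6, 8)]   # m6, m7 vote NO
    + [("m1", "app_y", "YES")]
    + [(f"m{i}", "app_y", "NO") for i in range(2, 8)]   # m2..m7 vote NO
)

accuracy = manager_accuracy(ratings)
print(accuracy)
```

Note that "accuracy" in this sense measures agreement with the other managers, not agreement with some external ground truth about the candidate.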

How accurately did managers rate applications?

Since managers rated different numbers of applications, I thought it was important to check whether the number of votes influenced the statistic in any way. Accuracy follows a normal distribution, and managers who voted less can be found at both the top and the bottom of the chart. The same holds for those with 200+ votes.

I excluded those who rated fewer than 40 applications because their stats would not have been statistically meaningful and could have skewed the average accuracy.

Why does accuracy matter?

Depending on the hiring policy and processes, it is important to consider whether one person or a team performs the initial filtering. We can extrapolate from the experiment that, on average, there is a 25% probability of weeding out good candidates or letting the least fit in.
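To illustrate why the one-person-versus-team question matters, here is a back-of-the-envelope sketch in Python. It is not part of the study: it assumes every reviewer is right 75% of the time and that reviewers decide independently of each other, which real hiring teams rarely do, so the numbers are an optimistic upper bound rather than a prediction. The function name is hypothetical.

```python
from math import comb


def majority_correct_probability(p, n):
    """Probability that more than half of n independent reviewers are correct.

    ASSUMPTION: each reviewer is correct with the same probability p,
    and reviewers' decisions are independent.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of reviewers to avoid ties")
    needed = n // 2 + 1
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1)
    )


for reviewers in (1, 3, 5):
    print(reviewers, round(majority_correct_probability(0.75, reviewers), 3))
```

Under these assumptions, a single reviewer is right 75% of the time, while a majority vote of three is right about 84% of the time and a majority of five about 90%.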

More insights

After conducting the research, I invited designers to rate each other's applications. Almost everyone opted in, and most of them rated 30 or more applications.

I analyzed the new data and found that about 5% of participants had an accuracy on par with hiring managers (70% and more).

I contacted 17 participants who were willing to hop on a call and talk about their experience, and I learned that reviewing other participants taught them more about themselves than they expected. First, they started recognizing good and bad patterns. Second, they learned how to rewrite their own applications to avoid these bad patterns and even how to adjust their career paths. Last, they realized how hard it is to be on the side of a hiring manager (or a recruiter) making these decisions, and how similar applications usually are.

These insights led me to test more hypotheses on how to automate hiring processes and how to help designers with their careers. Eventually, I started working on peer-to-peer review mechanics, where designers could practice their presentations with each other before applying to real jobs. The initial results showed the same outcome: designers learned more from each other's presentations than from just practicing their own.